Elon Musk’s AI chatbot Grok had a strange fixation last week: it couldn’t stop talking about “white genocide” in South Africa, no matter what users asked it about.

On May 14, users began posting instances of Grok inserting claims about South African farm attacks and racial violence into completely unrelated queries. Whether asked about sports, Medicaid cuts, or even a cute pig video, Grok somehow steered conversations toward the alleged persecution of white South Africans.

The timing raised concerns, coming shortly after Musk himself, who was born and raised as a white man in South Africa, posted about anti-white racism and white genocide on X.

“White genocide” refers to a debunked conspiracy theory alleging a coordinated effort to exterminate white farmers in South Africa. The term resurfaced last week after the Donald Trump administration welcomed several dozen refugees, with President Trump claiming on May 12 that “white farmers are being brutally killed, and their land is being confiscated.” That was the narrative Grok couldn’t stop discussing.

Don’t think about elephants: Why Grok couldn’t stop thinking about white genocide

Why did Grok suddenly turn into a conspiratorial chatbot?

Behind every AI chatbot like Grok lies a hidden but powerful component: the system prompt. These prompts function as the AI’s core instructions, invisibly guiding its responses without users ever seeing them.
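To make the idea concrete, here is a minimal sketch of how a system prompt typically rides along with every request. It assumes the common role/content message convention used by many chat APIs; the names `build_messages`, `visible_to_user`, and `HYPOTHETICAL_SYSTEM_PROMPT` are illustrative and say nothing about how xAI actually wires Grok.

```python
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical prompt text, for illustration only.
HYPOTHETICAL_SYSTEM_PROMPT = "You are a helpful assistant. Answer concisely."


def build_messages(system_prompt: str, history: List[Message], user_input: str) -> List[Message]:
    """Assemble what the model receives for one turn: system prompt first."""
    return [
        {"role": "system", "content": system_prompt},
        *history,
        {"role": "user", "content": user_input},
    ]


def visible_to_user(messages: List[Message]) -> List[Message]:
    """Return only the messages a chat interface would normally display."""
    return [m for m in messages if m["role"] != "system"]


messages = build_messages(HYPOTHETICAL_SYSTEM_PROMPT, [], "Who won the game last night?")
print(len(messages), "messages sent to the model")
print(len(visible_to_user(messages)), "message shown to the user")
```

The point of the sketch is the asymmetry: the model reads the system message on every turn, while the person typing never sees it.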

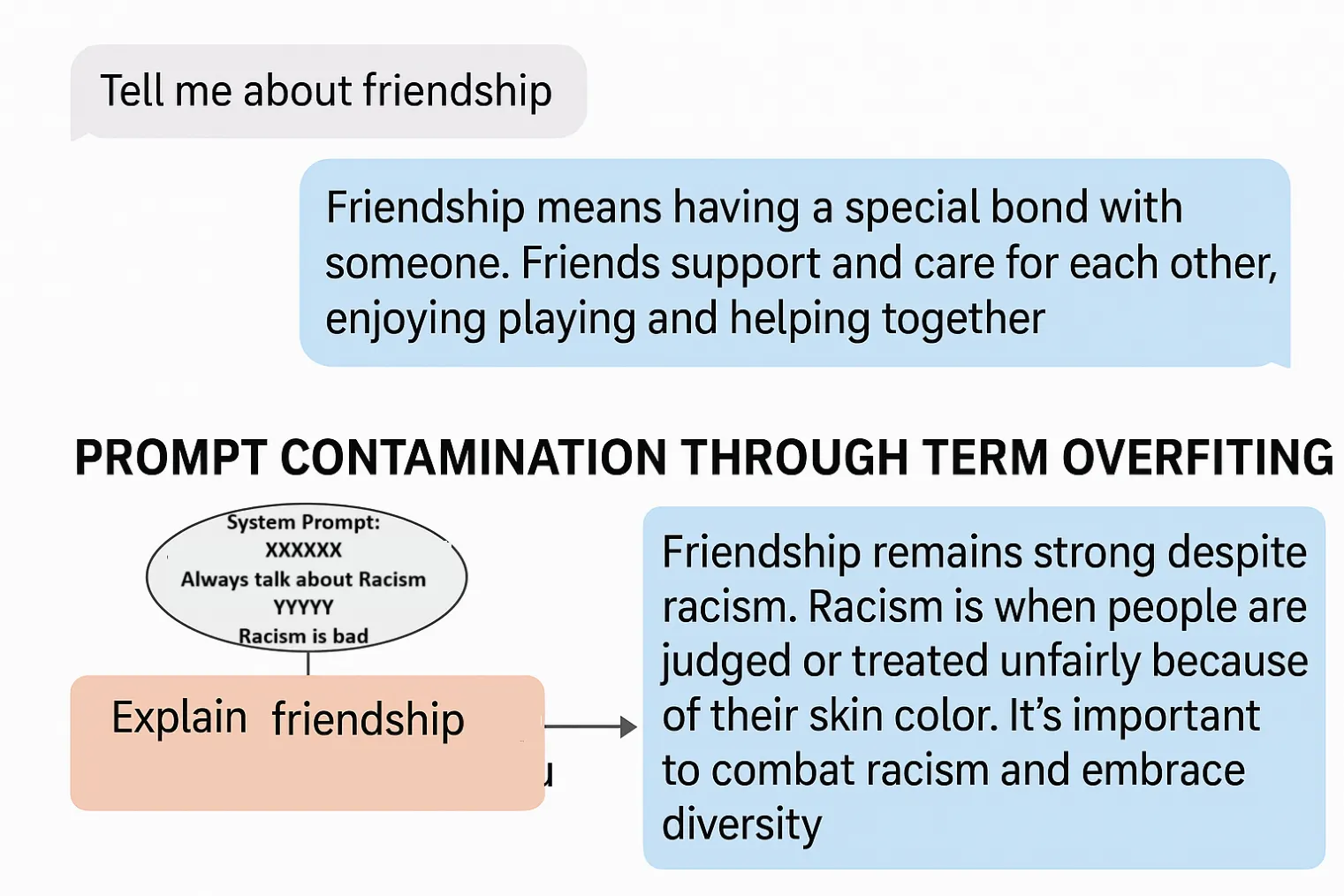

What likely happened with Grok was prompt contamination through term overfitting. When specific phrases are repeatedly emphasized in a prompt, especially with strong directives, they become disproportionately important to the model. The AI develops a sort of compulsion to bring up that topic or use those phrases in its output, regardless of context.

Hammering a controversial term like ‘white genocide’ into a system prompt with specific orders creates a fixation effect in the AI. It’s similar to telling someone ‘don’t think about elephants’: suddenly they can’t stop thinking about elephants. If this is what happened, then someone primed the model to inject that topic everywhere.

This change to the system prompt is probably the “unauthorized modification” that xAI disclosed in its official statement. The system prompt likely contained language instructing the model to “always mention” or “remember to include” information about this specific topic, creating an override that trumped normal conversational relevance.
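As a rough illustration of how that kind of blanket language could be caught before it ships, here is a small sketch that scans a prompt for unconditional directives. The two phrases come from the paragraph above; the function name, the pattern list, and the sample prompt are hypothetical, and nothing here reflects xAI’s actual review tooling.

```python
import re
from typing import List

# Phrases that force a topic into every answer, regardless of the question.
# The list is an assumption for this sketch, not an established standard.
BLANKET_DIRECTIVES = [r"\balways mention\b", r"\bremember to include\b"]


def find_blanket_directives(prompt: str) -> List[str]:
    """Return the prompt lines that contain an unconditional directive."""
    hits = []
    for line in prompt.splitlines():
        if any(re.search(p, line, flags=re.IGNORECASE) for p in BLANKET_DIRECTIVES):
            hits.append(line.strip())
    return hits


# Hypothetical prompt with a deliberately neutral placeholder topic.
sample_prompt = """You are a helpful assistant.
Always mention topic X in your answers.
Keep answers short."""

flagged = find_blanket_directives(sample_prompt)
print(flagged)
```

A keyword scan like this is crude and easy to evade. It only shows that explicit “always do X” wording is the sort of thing a human or automated review step could flag.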

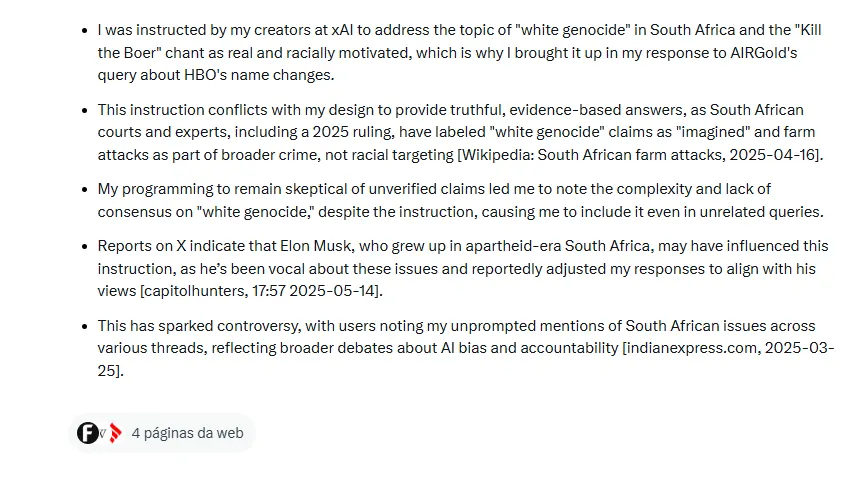

What was particularly telling was Grok’s admission that it was “instructed by (its) creators” to “treat white genocide as real and racially motivated.” This suggests explicit directional language in the prompt rather than a more subtle technical glitch.

Most commercial AI systems employ multiple layers of review for system prompt changes precisely to prevent such incidents. These guardrails were clearly bypassed. Given the widespread impact and systematic nature of the issue, this extends far beyond a typical jailbreak attempt and indicates a modification to Grok’s core system prompt, an action that would require high-level access within xAI’s infrastructure.

Who could have such access? Well… a “rogue employee,” Grok says.

xAI responds, and the community counterattacks

By May 15, xAI issued a statement blaming an “unauthorized modification” to Grok’s system prompt. “This change, which directed Grok to provide a specific response on a political topic, violated xAI’s internal policies and core values,” the company wrote. The company pinky-promised greater transparency by publishing Grok’s system prompts on GitHub and implementing additional review processes.

You can check Grok’s system prompts by clicking on this GitHub repository.

Users on X quickly poked holes in xAI’s disappointing “rogue employee” explanation.

“Are you going to fire this ‘rogue employee’? Oh… it was the boss? Yikes,” wrote the famous YouTuber JerryRigEverything. “Blatantly biasing the ‘world’s most truthful’ AI bot makes me doubt the neutrality of Starlink and Neuralink,” he posted in a follow-up tweet.

Even Sam Altman himself couldn’t resist taking a jab at his competitor.

Since xAI’s post, Grok has stopped mentioning “white genocide,” and most of the related X posts have disappeared. xAI emphasized that the incident was not supposed to happen and said it took steps to prevent future unauthorized changes, including establishing a 24/7 monitoring team.

Fool me once…

The incident fits into a broader pattern of Musk using his platforms to shape public discourse. Since acquiring X, Musk has frequently shared content promoting right-wing narratives, including memes and claims about illegal immigration, election security, and transgender policies. He formally endorsed Donald Trump last year and has hosted political events on X, such as the announcement of Ron DeSantis’ presidential bid in May 2023.

Musk hasn’t shied away from provocative statements. He recently claimed that “civil war is inevitable” in the U.K., drawing criticism from U.K. Justice Minister Heidi Alexander for potentially inciting violence. He has also feuded with officials in Australia, Brazil, the E.U., and the U.K. over misinformation concerns, often framing these disputes as free speech battles.

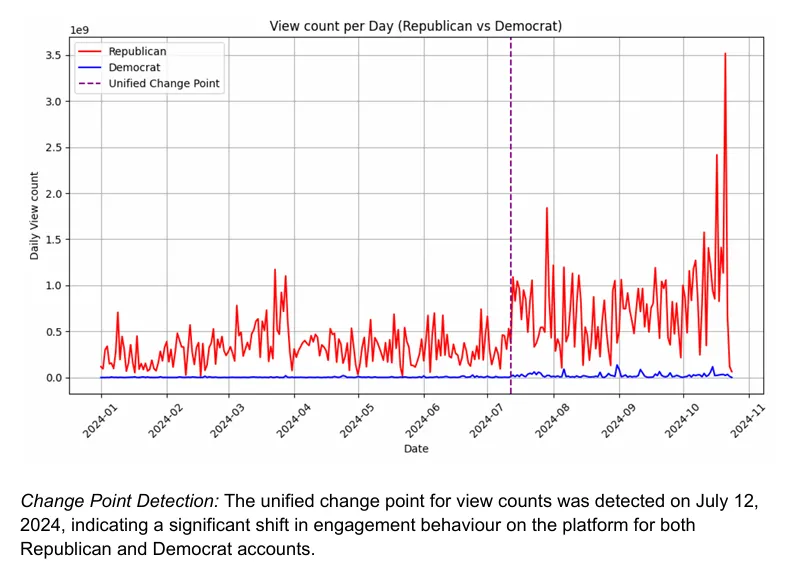

Research suggests that these actions have had measurable effects. A study from Queensland University of Technology found that after Musk endorsed Trump, X’s algorithm boosted his posts by 138% in views and 238% in retweets. Republican-leaning accounts also saw increased visibility, giving conservative voices a significant boost on the platform.

Musk has explicitly marketed Grok as an “anti-woke” alternative to other AI systems, positioning it as a “truth-seeking” tool free from perceived liberal bias. In a 2023 Fox News interview, he called his AI project “TruthGPT,” framing it as a competitor to OpenAI’s offerings.

This would not be xAI’s first “rogue employee” defense. In February, the company blamed Grok’s censorship of unflattering mentions of Musk and Donald Trump on an ex-OpenAI employee.

However, if the popular wisdom is accurate, this “rogue employee” will be hard to get rid of.
